How AI-Powered Omnichannel Chatbots Are Transforming HIV Prevention for South African Youth

- FHS Communications

As digital transformation reshapes healthcare globally, artificial intelligence (AI) omnichannel platforms are emerging as critical tools in addressing persistent public health challenges. A study found that adolescent girls and young women (AGYW), aged between 15 and 24, are twice as likely to be HIV positive – a trend observed globally, with women and girls of all ages accounting for 44% of all new HIV infections in 2023. South Africa is no different, and innovative digital solutions like the Sister Unathi chatbot are redefining how care is accessed, personalised, and scaled.

Why AGYW?

These figures call for preventive efforts to be targeted at AGYW, who face unique vulnerabilities in the context of HIV, including limited access to healthcare, social stigma, and gender-based violence. Although pre-exposure prophylaxis (PrEP) has been implemented in certain geographical areas in South Africa, it has not slowed infection rates in this age group. Project PrEP, a Wits RHI project, has turned to AI to enhance the understanding of PrEP use among AGYW in real-world contexts.

Why AI-Powered Tools?

Today’s young people are particular about how, when, and through which channel they access information, opting for internet-based sources, mobile technology, and social media. A recent study, Exploring Online Health Information–Seeking Behaviour Among Young Adults, found that they look for credible content, user-friendly design, tailored language, interactive features, privacy, and inclusivity – characteristics that Wits RHI also identified as factors that influence its success in reaching AGYW for the HIV prevention programme.

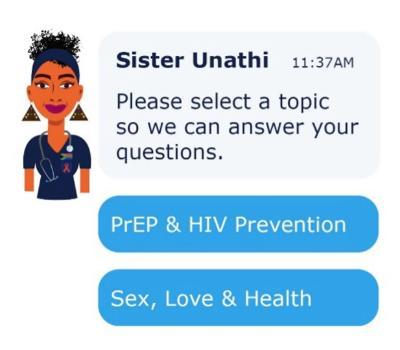

To meet these expectations, the AI-powered omnichannel Sister Unathi chatbot was designed to provide discreet, accessible support across multiple platforms that young people already use, including WhatsApp, Facebook Messenger, Instagram, and the MyPrEP website.

Caption: Meet the trusted, professional and non-judgmental Sister Unathi.

“The Sister Unathi chatbot was developed to address information gaps, support decision-making, and link users to HIV prevention and sexual reproductive health services in a confidential, youth-friendly format,” explains Nakita Sheobalak, the Project Manager for Digital Media at Wits RHI.

From NLP to AI

Caption: Image of Sister Unathi’s nurse persona announcing on social media about the omnichannel transition period and the chatbot’s “glow-up”.

Sister Unathi answers people’s questions about their sexual health, assists them in making informed health decisions, and refers them to relevant healthcare services.

Caption: Sister Unathi’s NLP character and UX flow.

The chatbot was originally launched on natural language processing (NLP), a subset of AI, and interpreted user messages using structured rules and keyword matching. Over time, message volumes increased across multiple platforms, and the NLP model, combined with human moderation, became difficult to sustain. The decision to transition to an AI-powered omnichannel version was made to improve automation, reduce pressure on human agents, and enhance the user experience. The AI model enables more responsive, context-aware interactions and allows the chatbot to operate more efficiently across platforms. The transition aimed to support a growing user base with consistent, personalised content and improved service linkage through multiple access points.

The current chatbot can recognise some commonly used youth phrases, local slang, and local languages, depending on the resources provided for the AI to learn from. However, its ability to detect emotional tone is still limited. While certain keywords like “I’m scared”, “I need counselling”, or “I am being abused” can trigger the correct response, along with a helpline referral or escalation to a human agent, the system does not yet have full sentiment analysis to pick up on panic or distress.
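The gap between keyword triggers and real sentiment analysis can be illustrated with a minimal Python sketch. It is not Sister Unathi's actual implementation: the function names are hypothetical, and the only trigger phrases used are the three examples quoted above.

```python
import re
from typing import Optional

# Assumption: the only triggers are the three phrases quoted in the article.
TRIGGER_PHRASES = ("i'm scared", "i need counselling", "i am being abused")


def normalise(message: str) -> str:
    """Lower-case the message, unify apostrophes, and strip punctuation."""
    text = message.lower().replace("’", "'")
    text = re.sub(r"[^a-z' ]+", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def find_trigger(message: str) -> Optional[str]:
    """Return the first trigger phrase found in the message, or None."""
    text = normalise(message)
    for phrase in TRIGGER_PHRASES:
        if phrase in text:
            return phrase
    return None


# An exact phrase is caught, even with different punctuation and casing.
matched = find_trigger("Please help, I’m scared!")

# The same distress, worded differently, slips through: the limitation
# that full sentiment analysis would be expected to address.
missed = find_trigger("im so frightened, nobody can know")
```

In this sketch `matched` holds the recognised phrase while `missed` is `None`, which is the kind of missed urgency a purely keyword-based approach risks.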

Challenges and Lessons Learned

Keyword-based replies posed risks of misinterpretation and missed urgency. The human moderation system struggled to keep up with the volume of incoming messages, especially during peak engagement periods, such as weekends or after working hours, between 16h00 and 08h00. “Relying solely on human agents was unsustainable at scale as it required more time, resources, and was costly to operate,” explains Sheobalak.

If the chatbot cannot answer a user’s question, it prompts the user to try rephrasing, offers a list of related topics, and provides the option to escalate the query to a human agent during available hours. In highly sensitive cases, such as when a user expresses suicidal thoughts, escalation depends on either specific trigger words recognised by the system or active intervention by a human moderator. In these instances, the chatbot displays an urgent response message with contact details for national mental health helplines such as SADAG, Lifeline, or LoveLife. However, the chatbot cannot confirm whether the user seeks help or follows through, and this remains a key limitation.
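The fallback behaviour described above could be sketched in Python as follows. This is an illustration under stated assumptions, not the production logic: the `Reply` type, the function names, and the message wording are hypothetical, and the agent hours (08h00 to 16h00) are inferred from the after-hours window mentioned earlier. Helpline contact details are deliberately left out.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import List, Sequence

# Helplines named in the article; real contact details are omitted here.
HELPLINES = ("SADAG", "Lifeline", "LoveLife")

# Assumption: human agents work 08h00-16h00, inferred from the article's
# description of 16h00-08h00 as "after working hours".
AGENT_START = time(8, 0)
AGENT_END = time(16, 0)


@dataclass
class Reply:
    kind: str                      # "urgent" or "fallback"
    text: str
    options: List[str] = field(default_factory=list)


def agents_available(now: time) -> bool:
    """True while a human agent is assumed to be on duty."""
    return AGENT_START <= now < AGENT_END


def fallback_reply(now: time, related_topics: Sequence[str],
                   crisis_detected: bool = False) -> Reply:
    """Build the reply for a question the chatbot could not answer."""
    if crisis_detected:
        # Sensitive cases skip the menu and surface helplines immediately.
        return Reply("urgent",
                     "Please reach out for support right away: "
                     + ", ".join(HELPLINES) + ".")
    options = ["Rephrase my question", *related_topics]
    if agents_available(now):
        options.append("Chat to a human agent")
    return Reply("fallback", "Sorry, I did not understand that.", options)


daytime = fallback_reply(time(10, 0), ["PrEP side effects"])
after_hours = fallback_reply(time(22, 0), ["PrEP side effects"])
urgent = fallback_reply(time(22, 0), [], crisis_detected=True)
```

Note that nothing in the sketch records whether the user actually contacts a helpline, which mirrors the limitation acknowledged above.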

Sheobalak says that programmatic plans are underway to strengthen follow-up on site-level incidents. “Protocols for reporting and responding to online cases of gender-based violence (GBV) or mental health-related distress remain limited and require further development,” she explains. She notes that although mental health and distress-related cases are not the chatbot’s primary focus, her team aims to follow up as soon as possible in situations where clients report serious concerns, such as negative clinic experiences or mental health challenges.

Research-Backed Technical Features

The platform is informed by a combination of anonymised backend data from the MyPrEP website, social media engagement analytics, and a WhatsApp Business account, as well as publicly available platform insights collected between March 2020 and February 2025 across MyPrEP’s messaging platforms. Personally identifiable information is removed from the data collected about users’ online patterns and interactions, such as data gathered through cookies.

“We have an operational review of over 50,000 conversations across these platforms, which revealed instances of delays exceeding 24 hours, especially when questions were not adequately answered,” says Sheobalak.
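A review of this kind amounts to comparing when each question was asked with when it was answered. The Python sketch below shows one way to flag conversations that waited more than 24 hours. The record layout, the function name, and the sample data are all hypothetical and do not come from the project's dataset.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

# Hypothetical record: (conversation id, asked at, answered at or None).
Conversation = Tuple[str, datetime, Optional[datetime]]


def delayed_conversations(conversations: Iterable[Conversation],
                          now: datetime,
                          threshold: timedelta = timedelta(hours=24)
                          ) -> List[str]:
    """Return ids of conversations whose wait exceeded the threshold.

    Unanswered conversations are measured up to `now`.
    """
    flagged = []
    for conv_id, asked_at, answered_at in conversations:
        waited = (answered_at or now) - asked_at
        if waited > threshold:
            flagged.append(conv_id)
    return flagged


# Made-up sample data, for illustration only.
sample = [
    ("c1", datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 9, 5)),
    ("c2", datetime(2025, 1, 4, 17, 30), datetime(2025, 1, 6, 8, 15)),
    ("c3", datetime(2025, 1, 5, 20, 0), None),
]
flagged = delayed_conversations(sample, now=datetime(2025, 1, 7, 8, 0))
```

Here `c2` (asked on a Saturday evening, answered on Monday morning) and the still-unanswered `c3` are flagged, while `c1` is not.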

The findings from the backend data were supported by local literature on mHealth engagement and internal data from Project PrEP, which identified limitations in human-dependent NLP models. Research also provided key insights into user behaviour and the use of NLP in African public health, highlighting challenges related to data training, contextual understanding, and deployment. Reviews of chatbot applications in healthcare further underscore these limitations, noting that many NLP-based chatbots struggle with limited flexibility, generic responses, and difficulty understanding user context. Sheobalak says that she and her team closely followed studies that discuss user dissatisfaction with chatbot accuracy and NLP performance, particularly in recognising emotional tone and generating appropriate responses. This helped them design operational systems to manage expected challenges around user trust and engagement.

Impact and Engagement

The Unitaid-funded project helps identify high-demand service areas not yet covered by mobile clinics or accessible public health clinics. It informs targeted content development based on frequently asked questions or misunderstood topics and supports donor reporting through data on reach, engagement, and behaviour.

Caption: Sister Unathi Chat sharing information on how to access a MyPrEP mobile clinic.

AI-driven Sister Unathi addresses previously identified gaps in digital health interventions for PrEP, SRH, and sexually transmitted infection (STI) services. These include:

- Accessibility as the chatbot operates 24/7;

- Scalability with AI-automation supporting high-volume messaging;

- Continuity of care, as the platform sends reminders, follow-ups, and referral messages, and guides users to specific service points; and

- User-centred design, with culturally resonant content that improves relevance for diverse populations.

Regulation of AI-Enhanced Healthcare Services

AI tools present an opportunity to enhance proficiency, particularly in resource-constrained environments like South Africa’s public healthcare sector. Their rapid pace of innovation, however, requires equally effective regulatory and policy frameworks to guide their ethical use.

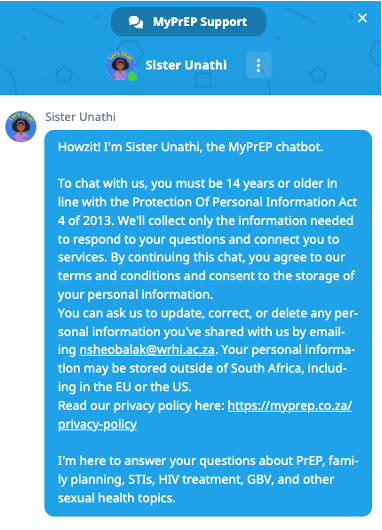

Data privacy, consent, and confidentiality are highly regulated in healthcare practice, and getting them wrong is not an option. Sheobalak reflects on the extensive process of setting up an AI-powered health chatbot in South Africa, identifying the legal and data protection requirements as the key hurdles. “…while guidance was provided, it is a lengthy and ongoing process. Our internal legal team guided the process to ensure the chatbot complies with the Protection of Personal Information Act (POPIA), particularly regarding consent and data privacy. Because the chatbot is hosted on Crisp.Chat, a platform based outside South Africa, we had to carefully review their data policies and sign a Data Protection Agreement to ensure user data is handled safely and legally,” she explains.

Caption: Sister Unathi’s POPIA and consent disclosure message that starts the chats across its platforms.

She says that although the South African Health Products Regulatory Authority (SAHPRA) released guidance in August 2025 for AI and machine learning‑enabled medical devices, there is currently no comprehensive national framework in South Africa that covers the full scope of AI use in public health programmes. This guidance provides clarity on the classification, safety, performance, and lifecycle controls for regulated medical devices. It does not yet cover broader digital health tools, such as AI-powered chatbots used for health education, referrals, or behaviour change communication. “This creates a grey area when working with international partners, especially around data hosting, intellectual property, and software licensing,” she noted. Despite this, she says her team regularly reviews their systems and follows legal and ethical best practices to keep users safe and their information protected.

Future Outlook

Sister Unathi is part of a broader vision to develop smart, scalable, and youth-friendly digital tools that strengthen HIV prevention, sexual and reproductive health, and overall wellness in South Africa, with potential for wider use.

Currently, the chatbot enables users to ask questions, receive accurate information, and be directed to nearby services. Yet, limitations remain. The goal is to evolve Sister Unathi into a more intelligent and personalised support tool. “We are exploring features such as STI image analysis, real-time geolocation to help users locate nearby services, and better referral tracking to close the loop on service delivery,” says Sheobalak as her team works to enhance timely, relevant, and confidential support.

Sister Unathi is also part of a larger digital ecosystem that has provided young people with trusted, accessible health information for over seven years. This includes the MyPrEP website, a geolocation-based service finder, e-learning tools, and social media platforms. Together, these platforms offer a supportive digital environment that we plan to expand and strengthen.